How to Use the Crawlers and Indexing Features in Blogger for SEO-Friendly Blogs

Introduction to Crawling and Indexing

Getting Started

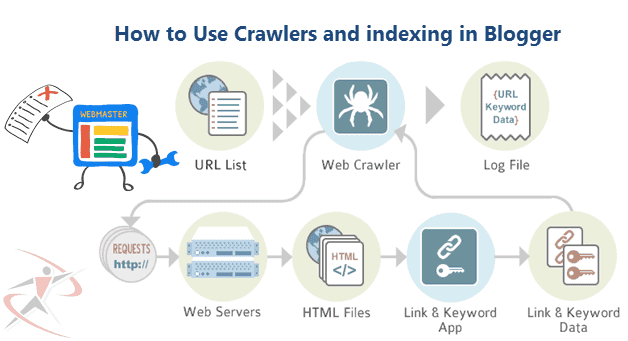

Automated website crawlers are powerful tools that help crawl and index content on the web. Search engines generally go through two main stages to make content available to users in search results: crawling and indexing.

Crawling is when search engine crawlers access publicly available webpages. In general, this involves looking at the webpages and following the links on those pages, just as a human user would. Indexing involves gathering together information about a page so that it can be made available ("served") through search results.

The distinction between crawling and indexing is critical. Confusion on this point is common and leads to webpages appearing or not appearing in search results. Note that a page may be crawled but not indexed; and, in rare cases, it may be indexed even if it hasn't been crawled. Additionally, in order to properly prevent indexing of a page, you must allow crawling or attempted crawling of the URL.
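The interaction between the two stages can be sketched as a small decision function. This is a deliberately simplified model, not how any search engine is actually implemented; the function name and its two boolean inputs are hypothetical and exist only for illustration.

```python
def search_visibility(blocked_by_robots_txt: bool, has_noindex: bool) -> str:
    """Simplified model of how crawl rules and noindex interact."""
    if blocked_by_robots_txt:
        # The crawler never fetches the page, so it cannot see a noindex
        # directive. The bare URL may still be indexed (for example, when
        # other sites link to it).
        return "not crawled; URL may still be indexed"
    if has_noindex:
        # The page was fetched, the directive was seen and honored.
        return "crawled; not indexed"
    return "crawled; eligible for indexing"


for blocked in (False, True):
    for noindex in (False, True):
        print(blocked, noindex, "->", search_visibility(blocked, noindex))
```

The case worth noticing is a page that is both blocked and marked noindex: the block wins, so the noindex directive is never read.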

The methods described in this set of documents help you control aspects of both crawling and indexing, so you can decide how crawlers access your content and how that content is presented to users in search results.

In some situations, you may not want to allow crawlers to access certain areas of a server. This could be the case if accessing those pages consumes limited server resources, or if problems with the URL and linking structure would create an infinite number of URLs if all of them were followed.

Controlling crawling

The robots.txt file is a text file that allows you to specify how you would like your site to be crawled. Before crawling a website, crawlers will generally request the robots.txt file from the server. Within the robots.txt file, you can include sections for specific (or all) crawlers with instructions ("directives") that let them know which parts can or cannot be crawled.

Location of the robots.txt file

Where is the robots.txt file? The robots.txt file must be located at the root of the website host. If you have submitted your website to Google Webmaster Tools, you can also view and test the file there.

URL Example:-

http://www.teezbuy.com/robots.txt
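Because the file always sits at the root of the host, its address can be derived from any page URL on the same site. Here is a minimal Python sketch of that rule; the helper name `robots_txt_url` and the post path in the example are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; drop the path, query and fragment,
    # because robots.txt lives at the root of the host.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# The post path below is a hypothetical example.
print(robots_txt_url("http://www.teezbuy.com/2016/05/some-post.html"))
```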

Custom robots.txt code example

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search
    Allow: /

    Sitemap: http://www.your-site.com/sitemap.xml
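To see what these rules mean in practice, you can feed them to Python's standard `urllib.robotparser` module and ask which paths a crawler may fetch. This is only a sketch: the `allowed` helper and the sample paths are hypothetical, Python's parser is not necessarily identical to Google's, and `site_maps()` assumes Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, one directive per line.
RULES = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://www.your-site.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Ask the parsed rules whether `agent` may fetch `path`."""
    return parser.can_fetch(agent, "http://www.your-site.com" + path)


# Sample paths are hypothetical.
print(allowed("Googlebot", "/search/label/SEO"))             # blocked by "Disallow: /search"
print(allowed("Googlebot", "/2016/05/my-post.html"))         # permitted by "Allow: /"
print(allowed("Mediapartners-Google", "/search/label/SEO"))  # empty Disallow permits everything
print(parser.site_maps())
```

The empty `Disallow:` line for Mediapartners-Google (the AdSense crawler) means nothing is blocked for it, while all other crawlers are kept out of the `/search` pages.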

Follow these steps to get a custom robots.txt file:

- Sign in to Google Webmaster Tools.

- On the Home tab, select your website, or click the "ADD A PROPERTY" button to add a new website.

- Click the website you added.

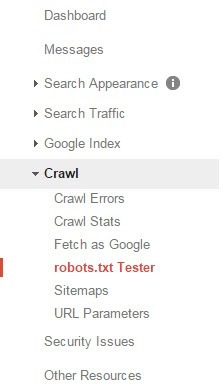

- In the Dashboard, click "Crawl", then select "robots.txt Tester", as shown in the image below.

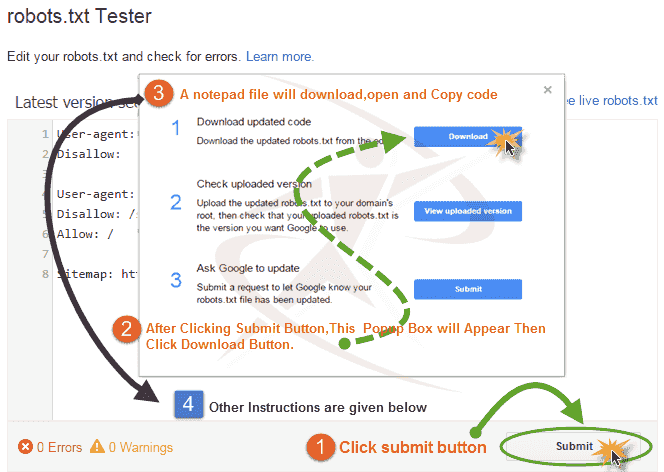

- Follow the instructions shown in the image below.

- After you click the Download button in the popup box, a text file will be downloaded. Copy the code from that file.

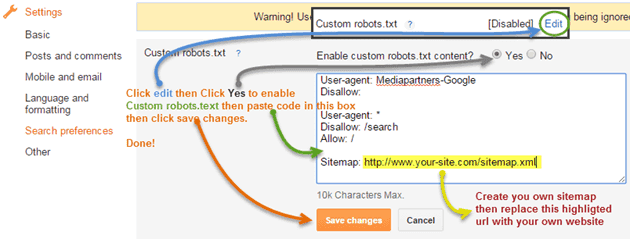

Submit robots.txt to Blogger

- Log in to your Blogger dashboard -> Select your blog -> Settings -> Search preferences -> Edit (custom robots.txt) -> Click "Yes" to enable this option.

- Paste the robots.txt code (which you downloaded earlier from Google Webmaster Tools) into the box provided and click "Save changes".

Create Sitemap

Visit xml-sitemaps and create a sitemap for your website. Then add the sitemap URL to your custom robots.txt in Blogger, using a "Sitemap:" line like the one in the example above.
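If you are curious what a sitemap file contains, the sketch below builds a minimal one with Python's standard library. It is not what the xml-sitemaps tool does internally; the function name `build_sitemap` and the page URLs are placeholders chosen for illustration. Only the `urlset`, `url` and `loc` elements and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal sitemap XML document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs; replace them with the pages of your own blog.
print(build_sitemap([
    "http://www.your-site.com/",
    "http://www.your-site.com/2016/05/my-post.html",
]))
```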

How to Set Custom Robots Header Tags

Warning! Use with caution. Incorrect use of these features can result in your blog being ignored by search engines.

Custom robots header tags in Blogger let you manage how the homepage, archive pages, search pages, and individual posts and pages are treated by search engines. They help you tell Google's crawlers not to index certain sections of your blog. A single mistake in these settings can remove your entire blog from search results, so handle them carefully to protect your blog's traffic.

In this tutorial I will describe how to use custom robots header tags in Blogger. Custom robots header tags are slightly different from the custom robots.txt file: robots.txt controls which URLs crawlers may access, while header tags control how individual pages are indexed and displayed.

Inside Blogger settings under custom robots header tags, you will have the following options that I will describe here.

- All: If you select this option for a page in Blogger, robots have no restrictions on crawling and indexing that page. This is also the default value for all pages and posts in Blogger if you have not enabled custom robots header tags.

- Noindex: This is the option most people want custom header tags for, but choosing it by mistake can remove your blog from search engines. If you choose "noindex" for a page in Blogger, robots may still crawl the page but will not index it, so the page will not appear in search results.

- Nofollow: Suppose you have a post that contains internal and external links. By default, Google's crawler follows each link in your posts and considers them for ranking. If you do not want the links on a particular page or post to be followed, add the nofollow robots meta tag to that page or choose this option in Blogger.

- None: This is equivalent to selecting both "noindex" and "nofollow".

- noarchive: Choosing this option removes the "Cached" link from the search result.

- nosnippet: Prevents a text snippet from being shown for the page in search results.

- noodp: Tells search engines not to use metadata (title or snippet) from the Open Directory Project (DMOZ) for the page.

- notranslate: Tells search engines not to offer a translation of the page in search results.

- noimageindex: If this option is selected, your post will remain in the search results, but the images on that page will not show up in image search.

- unavailable_after: Lets you stop a page from appearing in search results after a specified date and time.
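To check which of these options a page actually carries, you can read the robots meta tag from its HTML. The sketch below assumes the directives appear in a `<meta name="robots" ...>` tag; they can also be delivered in an HTTP response header, which this sketch does not inspect. The class and function names and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() != "robots":
            return
        for token in (attr.get("content") or "").split(","):
            token = token.strip().lower()
            if token == "none":
                # "none" is shorthand for noindex plus nofollow.
                self.directives.update({"noindex", "nofollow"})
            elif token:
                self.directives.add(token)


def robots_directives(html: str) -> set:
    """Return the set of robots directives declared in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page head, for illustration only.
sample = '<head><meta name="robots" content="noindex, noarchive"></head>'
print(sorted(robots_directives(sample)))
```

A page with no robots meta tag yields an empty set, which corresponds to the default "all" behavior described above.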

Custom Robots Header Tags Settings

Just follow the simple steps to make your custom robots header tags completely SEO friendly.

Log in to your Blogger dashboard -> Select your blog -> Settings -> Search preferences -> Edit (under custom robots header tags) -> Click "Yes" to enable this option.

Now select the options for your header tags as shown in the screenshot, and finally click "Save changes".

You are done!

Conclusion

The Blogger team is continuously working to make Blogger SEO friendly. Use custom robots header tags, together with a custom robots.txt file, to control crawling and indexing for the different sections of your blog.

Reviewed by Sanaulllah Rais

on

22:51

Rating:
